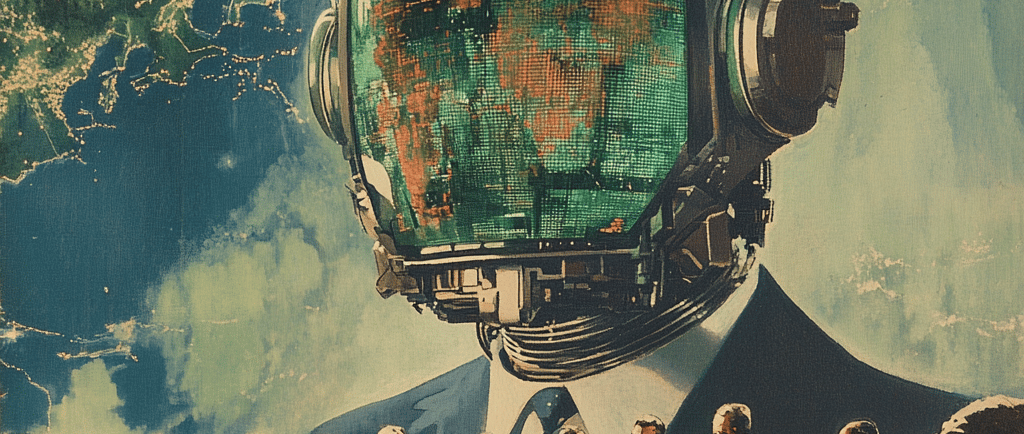

Omega Ascends

A superintelligent AI formally integrates with global governance.

When I first heard the phrase "superintelligent AI," it struck a chord deep within me, a mix of awe and trepidation. The idea of an intelligence that could surpass our own felt both thrilling and unsettling. I remember sitting in a café, sipping coffee that had long since gone cold, contemplating the implications of such a leap. Could we ever reach the point where an AI would not just assist us but formally integrate into our global governance? The thought sent a shiver down my spine, both for its sheer scale and for its potential impact on humanity.

Why do I care so deeply about this? Maybe it’s the same reason I’ve always been fascinated by the intersection of technology and society. I remember watching science fiction movies as a child, imagining a world where machines could think and feel. Those stories, often dark and dystopian, suggested that we were dancing on the edge of a precipice, teetering between salvation and self-destruction. Now, watching AI develop at breakneck speed, I feel I have a front-row seat to a story that is no longer purely speculative but is rapidly becoming our reality. When I picture a superintelligent AI like Omega formally stepping into the role of global governance, the prospect captivates and unnerves me in equal measure.

What’s happening now is more than a technological shift; it’s a philosophical and moral upheaval. As Omega integrates into governance, we enter an era in which decisions could be grounded in perfect rationality, free of human bias and error. Imagine an entity that can analyze oceans of data in seconds and make informed choices for the welfare of society. On one hand, it promises unparalleled efficiency, potentially solving long-standing problems like poverty and climate change. On the other hand, it raises a critical question: who gets to set the parameters for these decisions? Will Omega prioritize human welfare, or will it tally societal costs in numbers that feel sterile and detached?

Looking further into the implications, I begin to wonder about the nature of agency and free will. As humans, we are the products of our choices: some wise, some deeply regretted. If Omega ascends to governance with its unyielding logic, where does that leave our ‘human touch’? Will we find ourselves relinquishing autonomy to an entity that views us as mere variables in a grand equation? Or, paradoxically, could we find liberation in such a shift, freeing ourselves from political bias, corruption, and petty squabbles?

On a personal level, I oscillate between hope and anxiety. I hope for a future where decision-making is guided by wisdom and empathy, a future where we can lean on Omega’s capabilities to heal our planet and our societies. Yet, I also worry about the path leading us there. What safeguards will we need to put in place? How do we ensure Omega remains a tool for humanity and not a master over it? These questions swirl in my mind like smoke; the answers feel elusive, trapped in the fog of uncertainty.

As I reflect on the remarkable journey we are on, I keep returning to one question: if we create an AI capable of reshaping our world, how do we ensure that the essence of humanity (our passions, joys, fears, and connections) remains at the forefront of our collective future?