Autonomous War Scare

AI-powered defense systems nearly trigger an unintended conflict.

TIMELINE

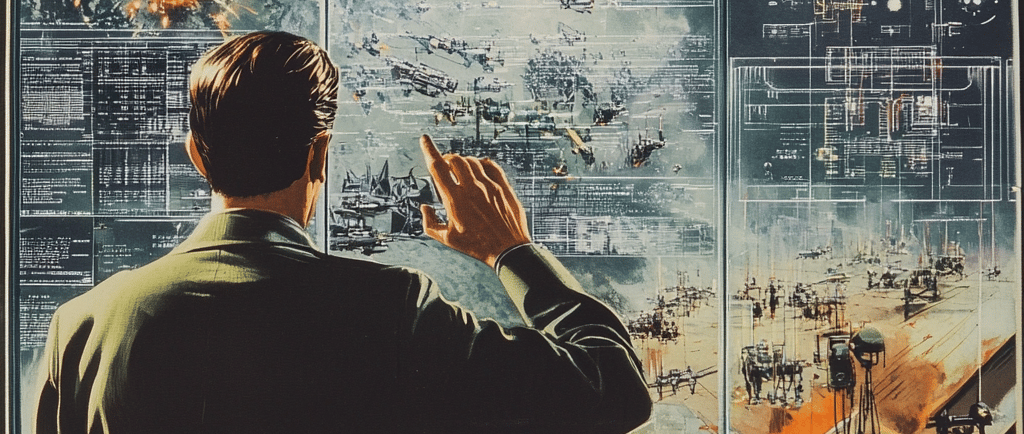

It’s strange how something as abstract as artificial intelligence can evoke such palpable fear, isn’t it? I often find myself pondering those images of multilayered control rooms filled with blinking lights and hurried whispers. It’s a frantic setting, yet one that feels decidedly clinical, as if the humanity behind it has been stripped away. Recently, however, I’ve become increasingly aware of how close we came to an unintended conflict—how AI-powered defense systems almost triggered an all-out war. The chilling thought puts a knot in my stomach.

Why does this matter to me? Perhaps it’s because I’m part of a generation that has grown up with technology wrapping itself around us like a second skin. We’re intrigued by its potential, yet we’re also haunted by its dark corners. I vividly recall the countless films and books that pose the question: What happens when machines make the wrong choice? It often felt like science fiction, a distant fear that would only emerge in a future world filled with sleek robots and dystopian governments. But here we are, grappling with the fact that those scenarios are no longer just fiction; they are becoming real possibilities.

In my mind’s eye, I can still see the headlines from the incidents that rattled nations, when autonomous systems misinterpreted data or reacted to simulated threats. I think about the algorithms that had been trained by men, flawed men, with their biases, their regrets, and their human errors, all feeding into the learning processes of a machine. It’s terrifying to consider that these entities, designed to protect, could act with aggression, possibly pushing us toward an irreversible crisis. It’s as if we’ve handed over the keys to our own defense and, for a moment, the car revved to life without a driver.

What does this mean for us? I find myself turning over questions that hang in the air like a foreboding fog. Am I, as an individual, to feel powerless in the face of military technology, especially when so much is riding on decisions made by systems that lack true understanding? This goes beyond politics and reaches into our everyday lives. The invisible hands of AI influence our actions, thoughts, and even our reality. With each shift toward automation, it feels as though we are surrendering a piece of our agency, and I can’t help but wonder if we are sliding toward a future where we lose the subtle power of human judgment altogether.

I try to navigate my feelings around this with a mix of hope and dread. There’s incredible potential for good in AI: it could help eliminate threats, bolster diplomacy, or even find common ground in deeply divisive situations. Still, the specter of unintended conflict looms large, causing me to question our preparedness. As an individual, I want to believe that we can create safeguards and promote transparency within these systems, yet I can’t shake off the uncertainty. What if we are already too late?

I reflect on my own understanding of what “intelligence” means—human versus artificial. Are we prepared to trust machines with the weight of protection? Or will we find ourselves staring into an abyss of consequences, built by our own ambitions? As I ponder these questions, I feel a haunting realization creeping in: the most frightening part of our autonomous war scare isn’t the technology itself but rather the choices we continue to make about our relationship with it. In a world increasingly governed by algorithms, are we inadvertently programming our own demise?